When AI Lies: What Is AI Hallucination and How to Spot It

If you’ve ever asked an AI chatbot a question and got a confident answer — only to find out it was completely wrong — you’ve already experienced an AI hallucination.

It looks real. It sounds right. It flows like something a smart person would say. But it’s not true.

As more people rely on AI tools to write emails, summarise news, generate reports, or even draft legal documents, this issue becomes more than a glitch — it becomes a serious risk. And the worst part? You might not know it’s wrong unless you double-check.

That’s why understanding what AI hallucination is, why it happens, and how to handle it is not just helpful — it’s necessary.

What Is AI Hallucination? (Plain Explanation)

AI hallucination sounds technical, but it’s simple to understand.

It means this: the AI creates something that sounds factual, but isn’t. The system isn’t intentionally lying. It’s just filling in blanks based on patterns, not facts.

Let’s say you ask an AI to summarise a research paper or explain an event in history. It gives you a very confident response, but it might insert dates, names, quotes, or statistics that never appeared in the original source.

It doesn’t do this out of bad intent. The model doesn’t know the truth; it only knows patterns of language. If many examples in its training data contain certain phrases or formats, the AI predicts that its answer should look similar, even if the specifics are made up.

This false content is what we call a hallucination.

Why AI Hallucination Happens — Broken Down Simply

Here’s what’s going on under the surface.

AI language models — like ChatGPT, Gemini, or Claude — are built to predict the next word in a sentence. They do not “know” facts. They do not check real-time sources unless connected to the web. They simply generate text based on probability.

For example, if you ask, “Who discovered the moon’s magnetic field in 1969?” and no such event is clearly documented in its training data, the AI might guess. It might say, “Dr. James Halston discovered the magnetic field of the moon in 1969 during the Apollo 12 mission.”

Sounds legit, right? But Dr. Halston is invented, and so is the tidy story of one scientist making that discovery on that mission. It’s a well-worded error, and that’s the danger.

AI fills in the gaps when:

- Your question is too specific

- The answer doesn’t exist in its training data

- It was trained on outdated or incomplete information

- You ask for something that sounds like it should be real

It’s like asking someone to tell a story they’ve only overheard — they’ll try, but it might be part truth and part fiction.
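To make the “predict the next word” idea concrete, here is a toy sketch in Python. It is a deliberately tiny bigram model: it only counts which word follows which in two made-up training sentences. That is a simplifying assumption for illustration; real chatbots use large neural networks, not word-pair counts. The point it shows is the same, though: the score measures how familiar a sequence of words looks, not whether it is true.

```python
from collections import Counter, defaultdict
from fractions import Fraction

# A tiny, made-up training corpus (illustrative only).
CORPUS = [
    "armstrong walked on the moon in 1969",
    "scientists measured the magnetic field of the earth",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def sentence_probability(model, sentence):
    """Multiply P(next word | previous word) across the sentence.

    Nothing here checks facts: a sentence scores well simply
    because its word pairs appeared in the training text.
    """
    words = sentence.split()
    prob = Fraction(1)
    for prev, nxt in zip(words, words[1:]):
        counts = model.get(prev, Counter())
        total = sum(counts.values())
        if total == 0:
            return Fraction(0)  # previous word never seen in training
        prob *= Fraction(counts[nxt], total)
    return prob

model = train_bigrams(CORPUS)
true_claim = "scientists measured the magnetic field of the earth"
fake_claim = "scientists measured the moon in 1969"  # never in the corpus

print(sentence_probability(model, true_claim))  # 1/9
print(sentence_probability(model, fake_claim))  # 1/3
```

The invented sentence never appears in the training text, yet the model rates it as more likely than a sentence it was actually trained on, because every word pair in it is familiar. Scaled up enormously, that is how a fluent but fictional answer can come out sounding confident.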

Real-World Examples of AI Hallucination

To see how serious hallucinations can be, consider a few cases where they caused confusion, led to mistakes, or even got people into legal trouble.

A Fake Citation in a Research Summary

A university student used an AI tool to help summarise a scientific paper and asked it to list the sources. The AI confidently listed five references — complete with author names, journal titles, and issue numbers.

Only problem? Three of those sources didn’t exist. The AI generated fake citations that looked real. The student nearly submitted them without checking.

Wrong Details in a Biography

Someone used AI to write a biography for a website. The AI included awards the person had never won and an educational background that didn’t match reality. Everything was presented fluently, but it was fiction dressed as fact.

AI-Generated Legal Cases

In a widely reported case, a lawyer submitted legal arguments written with the help of an AI tool. The AI included fake case references — legal decisions that had never happened. The lawyer assumed they were real and didn’t verify them.

The result? The judge found out about the fabricated citations, and the lawyer faced professional consequences.

Business Reports With Invented Data

An entrepreneur asked an AI to draft a market analysis for investors. The report included statistics and competitor info, but much of it was outdated, misrepresented, or fabricated. The AI wasn’t lying on purpose; it was simply producing text that made the report look complete.

Why Hallucination Can Be Dangerous

Some people shrug it off. “It’s just a small error.” But in real-world use, AI hallucinations can cause real problems.

It Spreads Misinformation

False data can be shared, re-posted, or quoted as fact, especially if it sounds confident. Over time, errors compound and trust is broken.

It Damages Credibility

If you publish something with fake quotes, bad stats, or made-up history, your audience might lose confidence in your work, even if you didn’t realise the info was false.

It Has Legal and Ethical Risks

In law, medicine, finance, or education, using incorrect information from an AI model could lead to fines, lawsuits, or public backlash.

It Confuses Users Who Assume AI = Authority

Many people assume AI is “smarter than them.” They see the confidence in the language and believe it must be right. That’s a dangerous assumption — and a habit we need to change.

How to Tell When AI Might Be Hallucinating

AI doesn’t always warn you when it’s unsure. That means you have to watch for clues. Here’s how to spot content that might not be real.

It Sounds Overconfident Without Evidence

AI tends to say things clearly — even when it doesn’t “know.” If it gives you a list of facts or steps without any hesitation or sourcing, that’s a sign to double-check.

Example:

“According to Harvard’s 2018 study, people who nap for 14 minutes improve memory by 30%.”

Sounds great — but there’s no such Harvard study. It was made up.

The Details Seem Too Perfect

If an AI gives a full name, company, date, and quote that all sound too clean, pause. These “polished facts” are often created by combining unrelated pieces of text.

Example:

“Dr. Helen Morton of Stanford said in 2003, ‘Creativity is just memory in disguise.’”

It looks real. But she may not exist — and the quote may be fictional.

The Sources Don’t Exist (or Don’t Match)

Ask the AI to show its sources. Copy the titles into Google. If nothing comes up, or if the article exists but doesn’t say what the AI claimed, treat it as a likely hallucination.

Check:

- Article titles

- Author names

- Book ISBNs

- Case numbers (in legal texts)

- DOI links for academic journals
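Some of these checks can be partly automated. Below is a minimal Python sketch, assuming you only want to catch malformed identifiers before looking them up: it validates the check digit of an ISBN-10 or ISBN-13 and tests whether a string has the general shape of a DOI. The helper names and the loose DOI pattern are illustrative choices, not an official validator. A pass only means the identifier is well-formed; a fabricated ISBN can still have a valid check digit, so each one still needs a real lookup.

```python
import re

# Loose DOI shape (an assumption): "10.", a 4-9 digit registrant code,
# a slash, then a suffix. Some legitimate DOIs fall outside this pattern.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[-._;()/:A-Z0-9]+", re.IGNORECASE)

def looks_like_doi(text):
    """Return True if text (optionally prefixed) has the shape of a DOI."""
    text = text.strip()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if text.lower().startswith(prefix):
            text = text[len(prefix):]
            break
    return DOI_PATTERN.fullmatch(text) is not None

def isbn_checksum_ok(isbn):
    """Check the check digit of an ISBN-10 or ISBN-13 (hyphens allowed)."""
    chars = [c for c in isbn.upper() if c in "0123456789X"]
    if len(chars) == 13 and "X" not in chars:
        # ISBN-13: weights alternate 1, 3, 1, 3, ...; total must be divisible by 10.
        total = sum(int(c) * (1 if i % 2 == 0 else 3) for i, c in enumerate(chars))
        return total % 10 == 0
    if len(chars) == 10 and "X" not in chars[:9]:
        # ISBN-10: weights 10 down to 1; a final "X" stands for 10.
        values = [10 if c == "X" else int(c) for c in chars]
        total = sum(v * w for v, w in zip(values, range(10, 0, -1)))
        return total % 11 == 0
    return False

print(isbn_checksum_ok("978-0-306-40615-7"))            # True
print(isbn_checksum_ok("978-0-306-40615-8"))            # False
print(looks_like_doi("https://doi.org/10.1000/182"))    # True
print(looks_like_doi("10.12/abc"))                      # False
```

A failed check is a strong hint the AI invented the identifier. A passed check just means it is worth searching for.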

The AI Doesn’t Handle Follow-Up Questions Well

If you press it with:

“Where did you get this information?”

“Can you link the original source?”

“Who said that quote?”

… and the AI starts backtracking or gives vague replies — it likely hallucinated.

How to Reduce the Risk of AI Hallucination in Your Work

You can’t stop hallucinations completely, but you can limit how often they happen. And when they do, you can catch them faster.

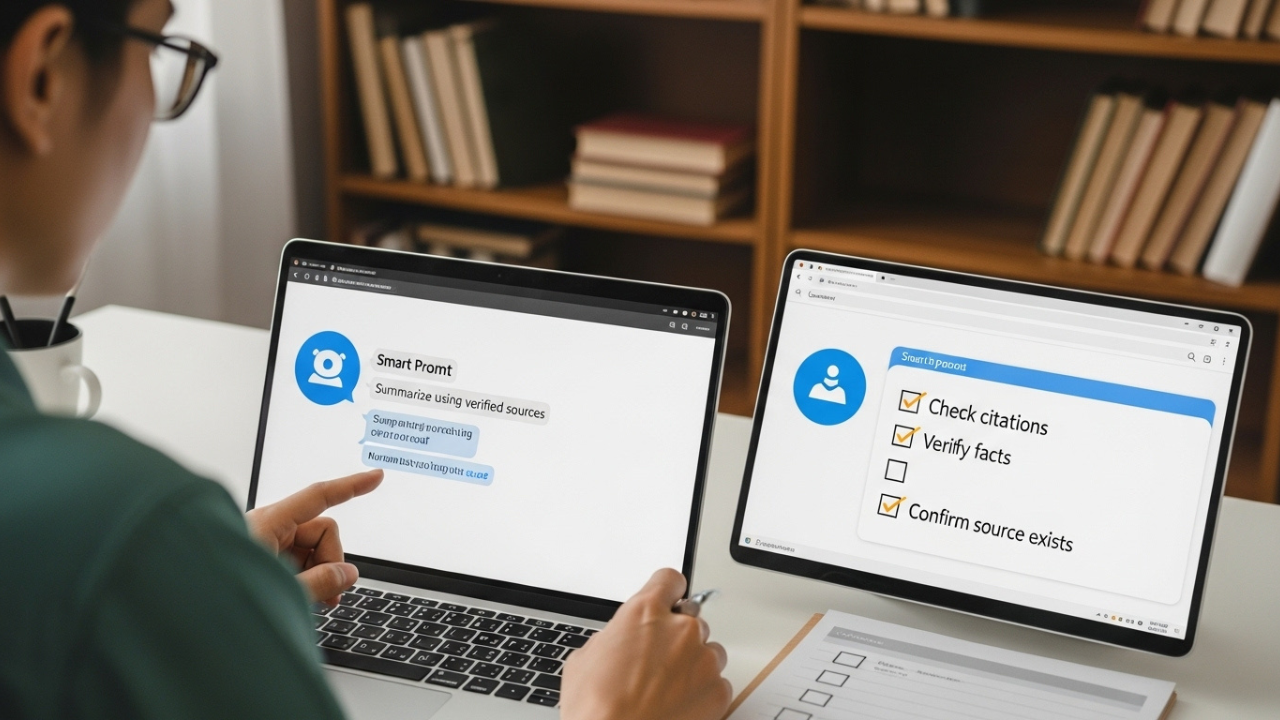

Use Smarter Prompts That Give AI Clear Direction

Instead of asking:

“Summarize the history of the Internet.”

Try:

“Summarize the history of the Internet using only confirmed public sources. If unsure, say you don’t know.”

This gives the model explicit permission to say it doesn’t know. It won’t eliminate hallucinations, but it can make them less likely.

Ask for Citations and Then Check Them

Many tools will produce references on request, but not all of those references are real. After the AI gives sources:

- Search for each one

- Make sure the content exists and matches the claim

- Never copy citations directly into academic work without checking
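For the “matches the claim” step, a quick first pass is to check whether a quote the AI attributes to a source actually appears in that source. Here is a minimal Python sketch, assuming you already have the source text as a string (for example, copied from the article). The function names and sample text are hypothetical. It normalises case, curly quotes, and whitespace before comparing, so it only catches verbatim quotes; paraphrased claims still need a human read.

```python
import re
import unicodedata

def normalise(text):
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    text = re.sub(r"\s+", " ", text)
    return text.strip().lower()

def quote_in_source(quote, source_text):
    """Return True if the quote appears verbatim (after normalising) in the source."""
    return normalise(quote) in normalise(source_text)

# Hypothetical source text, e.g. pasted from the article the AI cited.
SOURCE = "In a 2019 talk, the speaker said:\n\u201cGood research   starts with good questions.\u201d"

print(quote_in_source("good research starts with good questions", SOURCE))  # True
print(quote_in_source("Creativity is just memory in disguise.", SOURCE))    # False
```

If the quote doesn’t turn up, go back to the source and read it yourself before deciding whether the AI paraphrased or simply made it up.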

Use AI for Drafting, Not Final Information

Let AI organise, outline, or reword — not verify facts.

Good use:

“Draft a headline for a blog about sleep habits.”

Bad use:

“Tell me the latest sleep research with citations.”

Use AI to brainstorm. Use trusted sources to confirm.

Choose Tools with Live Data (When Accuracy Matters)

Some AI tools, like Perplexity AI, You.com, or ChatGPT with web search enabled, can pull information from real-time search.

They’re better for:

- News

- Market trends

- Scientific findings

- Anything that changes quickly

Language-only tools (without web access) are more likely to hallucinate facts. Web-connected tools can still misread or misquote what they find, so check their sources too.

My Opinion: AI Isn’t Dangerous Until You Stop Thinking

AI is a helpful tool. It can help you save time, organise your thinking, and spark new ideas. But it was never meant to be a source of truth on its own.

Use it to think with you, not for you.

When you stay in the loop, double-check your facts, and keep asking questions, AI becomes an amazing assistant.

Hallucinations don’t have to ruin your work. They just remind you: you are still the human in the room.